SNA with RSiena

1 getting started

Start with clean workspace

rm(list = ls())2 Goal

We have two goals:

- How to make an RSiena object ready to analyze.

- Analyze a (very very) simple network evolution model

3 Custom functions

fpackage.check: Check if packages are installed (and install if not) in R (source).

fsave: Save to processed data in repository

f_pubnets: select scholars and construct directed publication network.

fpackage.check <- function(packages) {

lapply(packages, FUN = function(x) {

if (!require(x, character.only = TRUE)) {

install.packages(x, dependencies = TRUE)

library(x, character.only = TRUE)

}

})

}

fsave <- function(x, file = NULL, location = "./data/processed/") {

ifelse(!dir.exists("data"), dir.create("data"), FALSE)

ifelse(!dir.exists("data/processed"), dir.create("data/processed"), FALSE)

if (is.null(file))

file = deparse(substitute(x))

datename <- substr(gsub("[:-]", "", Sys.time()), 1, 8)

totalname <- paste(location, datename, file, ".rda", sep = "")

save(x, file = totalname) #need to fix if file is reloaded as input name, not as x.

}

fload <- function(filename) {

load(filename)

get(ls()[ls() != "filename"])

}

fshowdf <- function(x, ...) {

knitr::kable(x, digits = 2, "html", ...) %>%

kableExtra::kable_styling(bootstrap_options = c("striped", "hover")) %>%

kableExtra::scroll_box(width = "100%", height = "300px")

}

# this is the most important one. We created it in the previous script

f_pubnets <- function(df_scholars = df, list_publications = publications, discip = "sociology", affiliation = "RU",

waves = list(wave1 = c(2018, 2019, 2020), wave2 = c(2021, 2022, 2023))) {

publications <- list_publications %>%

bind_rows() %>%

distinct(title, .keep_all = TRUE)

df_scholars %>%

filter(affil1 == affiliation | affil2 == affiliation) %>%

filter(discipline == discip) -> df_sel

networklist <- list()

for (wave in 1:length(waves)) {

networklist[[wave]] <- matrix(0, nrow = nrow(df_sel), ncol = nrow(df_sel))

}

publicationlist <- list()

for (wave in 1:length(waves)) {

publicationlist[[wave]] <- publications %>%

filter(gs_id %in% df_sel$gs_id) %>%

filter(year %in% waves[[wave]]) %>%

select(author) %>%

lapply(str_split, pattern = ",")

}

publicationlist2 <- list()

for (wave in 1:length(waves)) {

publicationlist2[[wave]] <- publicationlist[[wave]]$author %>%

# lowercase

lapply(tolower) %>%

# Removing diacritics

lapply(stri_trans_general, id = "latin-ascii") %>%

# only last name

lapply(word, start = -1, sep = " ") %>%

# only last last name

lapply(word, start = -1, sep = "-")

}

for (wave in 1:length(waves)) {

# let us remove all publications with only one author

remove <- which(sapply(publicationlist2[[wave]], FUN = function(x) length(x) == 1) == TRUE)

publicationlist2[[wave]] <- publicationlist2[[wave]][-remove]

}

for (wave in 1:length(waves)) {

pubs <- publicationlist2[[wave]]

for (ego in 1:nrow(df_sel)) {

# which ego?

lastname_ego <- df_sel$lastname[ego]

# for all publications

for (pub in 1:length(pubs)) {

# only continue if ego is author of pub

if (lastname_ego %in% pubs[[pub]]) {

aut_pot <- which.max(pubs[[pub]] %in% lastname_ego)

# only continue if ego is first author of pub

if (aut_pot == 1) {

# check all alters/co-authors

for (alter in 1:nrow(df_sel)) {

# which alter

lastname_alter <- df_sel$lastname[alter]

if (lastname_alter %in% pubs[[pub]]) {

networklist[[wave]][ego, alter] <- networklist[[wave]][ego, alter] + 1

}

}

}

}

}

}

}

return(list(df = df_sel, network = networklist))

}4 packages

RSiena: what do you think? :-)

packages = c("RSiena", "tidyverse", "stringdist", "stringi")

fpackage.check(packages)5 input

df <- fload("./data/processed/20230621df_complete.rda")

publications <- fload("./data/processed/20230621list_publications_jt.rda")6 RU - sociology

output <- f_pubnets()

df_soc <- output[[1]]

df_network <- output[[2]]6.1 Step 1: define data

6.1.1 dependent variable

# let us check the number of waves

length(df_network)#> [1] 2wave1 <- df_network[[1]]

wave2 <- df_network[[2]]

# let us put the diagonal to zero

diag(wave1) <- 0

diag(wave2) <- 0

# we want a binary tie (not a weighted tie)

wave1[wave1 > 1] <- 1

wave2[wave2 > 1] <- 1

# put the nets in an array

net_soc_array <- array(data = c(wave1, wave2), dim = c(dim(wave1), 2))

# dependent

net <- sienaDependent(net_soc_array)6.1.2 independent variables

# gender

gender <- as.numeric(df_soc$gender == "female")

gender <- coCovar(gender)Note that you can and must add a lot more relevant independent variables.

mydata <- sienaDataCreate(net, gender)6.2 Step 2: create effects structure

myeff <- getEffects(mydata)

# effectsDocumentation(myeff)6.3 Step 3: get initial description

ifelse(!dir.exists("results"), dir.create("results"), FALSE)#> [1] FALSEprint01Report(mydata, modelname = "./results/soc_init")And have a look at it!!

What do we learn from this file?

6.4 Step 4: specify model

This should be both empirically and theoretically motivated. Most importantly, hopefully you have already thought about this step when you formulated your hypotheses and even before you constructed your data.

Let us discuss the model below.

What do these effects mean?

Why did we include these effects (and not others)?

myeff <- includeEffects(myeff, isolateNet, inPop, outAct, inAct, transTrip) #we know that quite a lot of staff has not published with someone else#> effectName include fix test initialValue parm

#> 1 transitive triplets TRUE FALSE FALSE 0 0

#> 2 indegree - popularity TRUE FALSE FALSE 0 0

#> 3 outdegree - activity TRUE FALSE FALSE 0 0

#> 4 indegree - activity TRUE FALSE FALSE 0 0

#> 5 network-isolate TRUE FALSE FALSE 0 0myeff <- includeEffects(myeff, sameX, egoX, altX, interaction1 = "gender")#> effectName include fix test initialValue parm

#> 1 gender alter TRUE FALSE FALSE 0 0

#> 2 gender ego TRUE FALSE FALSE 0 0

#> 3 same gender TRUE FALSE FALSE 0 0What structural effects would we normally want to include?

6.5 Step5 estimate

myAlgorithm <- sienaAlgorithmCreate(projname = "soc_init")

(ans <- siena07(myAlgorithm, data = mydata, effects = myeff))

# (the outer parentheses lead to printing the obtained result on the screen) if necessary, estimate

# further

(ans <- siena07(myAlgorithm, data = mydata, effects = myeff, prevAns = ans, returnDeps = TRUE))ans#> Estimates, standard errors and convergence t-ratios

#>

#> Estimate Standard Convergence

#> Error t-ratio

#>

#> Rate parameters:

#> 0 Rate parameter 4.1454 ( 1.0037 )

#>

#> Other parameters:

#> 1. eval outdegree (density) -2.4708 ( 0.7370 ) -0.1259

#> 2. eval reciprocity 2.3635 ( 0.6552 ) -0.0341

#> 3. eval transitive triplets 0.7667 ( 0.4227 ) -0.1454

#> 4. eval indegree - popularity 0.2207 ( 0.0623 ) -0.1125

#> 5. eval outdegree - activity 0.0194 ( 0.1030 ) -0.1130

#> 6. eval indegree - activity -0.4241 ( 0.3033 ) -0.1311

#> 7. eval network-isolate 2.4386 ( 1.1223 ) 0.0809

#> 8. eval gender alter -0.3887 ( 0.2977 ) 0.1094

#> 9. eval gender ego 0.1515 ( 0.3344 ) 0.0128

#> 10. eval same gender 0.1572 ( 0.2825 ) -0.0639

#>

#> Overall maximum convergence ratio: 0.1886

#>

#>

#> Total of 2876 iteration steps.Assignment 5: Note, this is a challening one, intended for you folks who have been bored so far. ;-) Can you think of influence effects?

5a: scrape (or manually collect) the current H-index for our scholars and add it to your dataframe.

5b: Formulate a hypotheses with respect to authors influencing each others H-index

5b: based on the two H-index scores over time, and your hypo, define a behavioral dependent variable.

5e: update your model, including now a network and behavioral dependent variable and respective statistics.

5f: estimate model

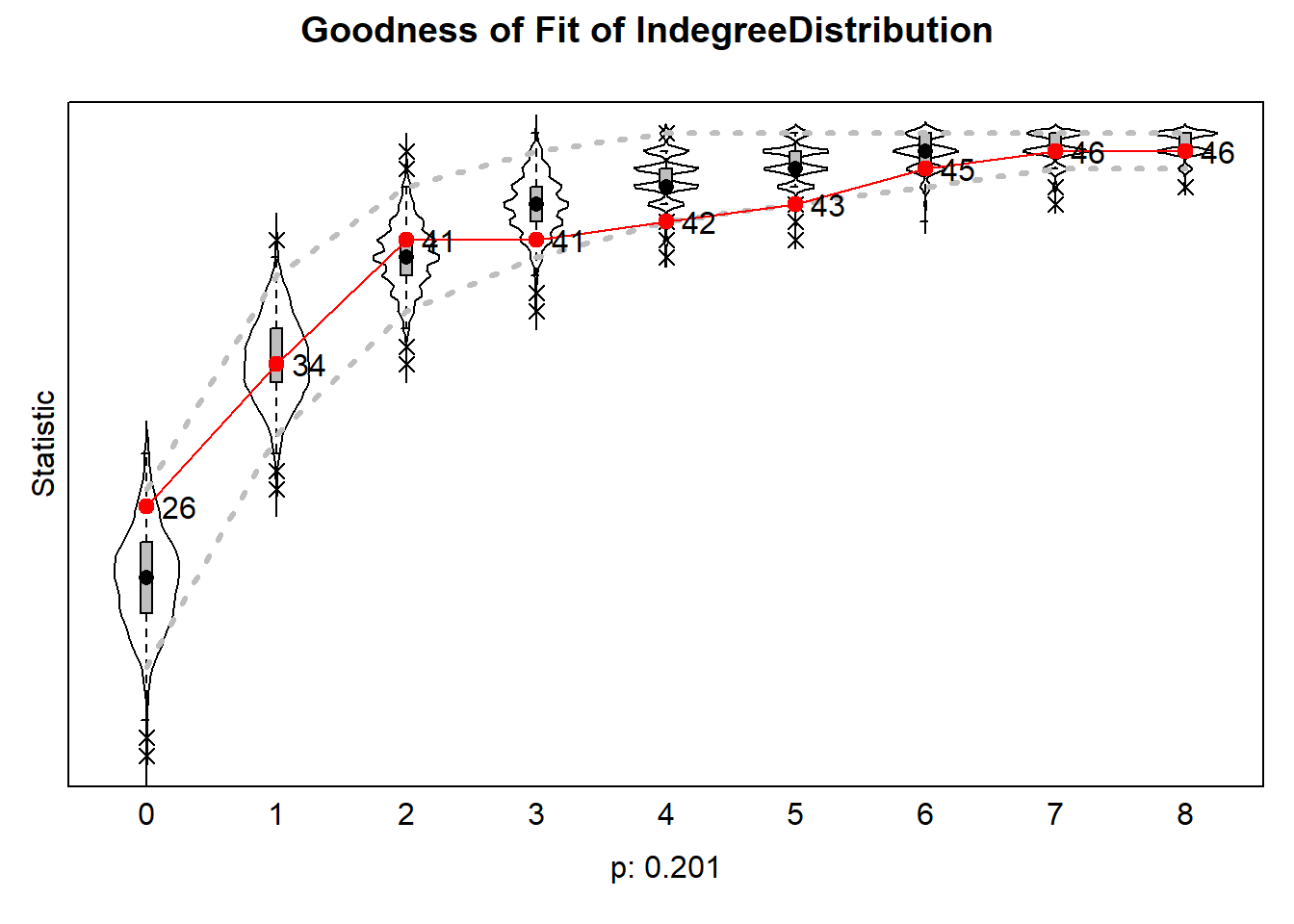

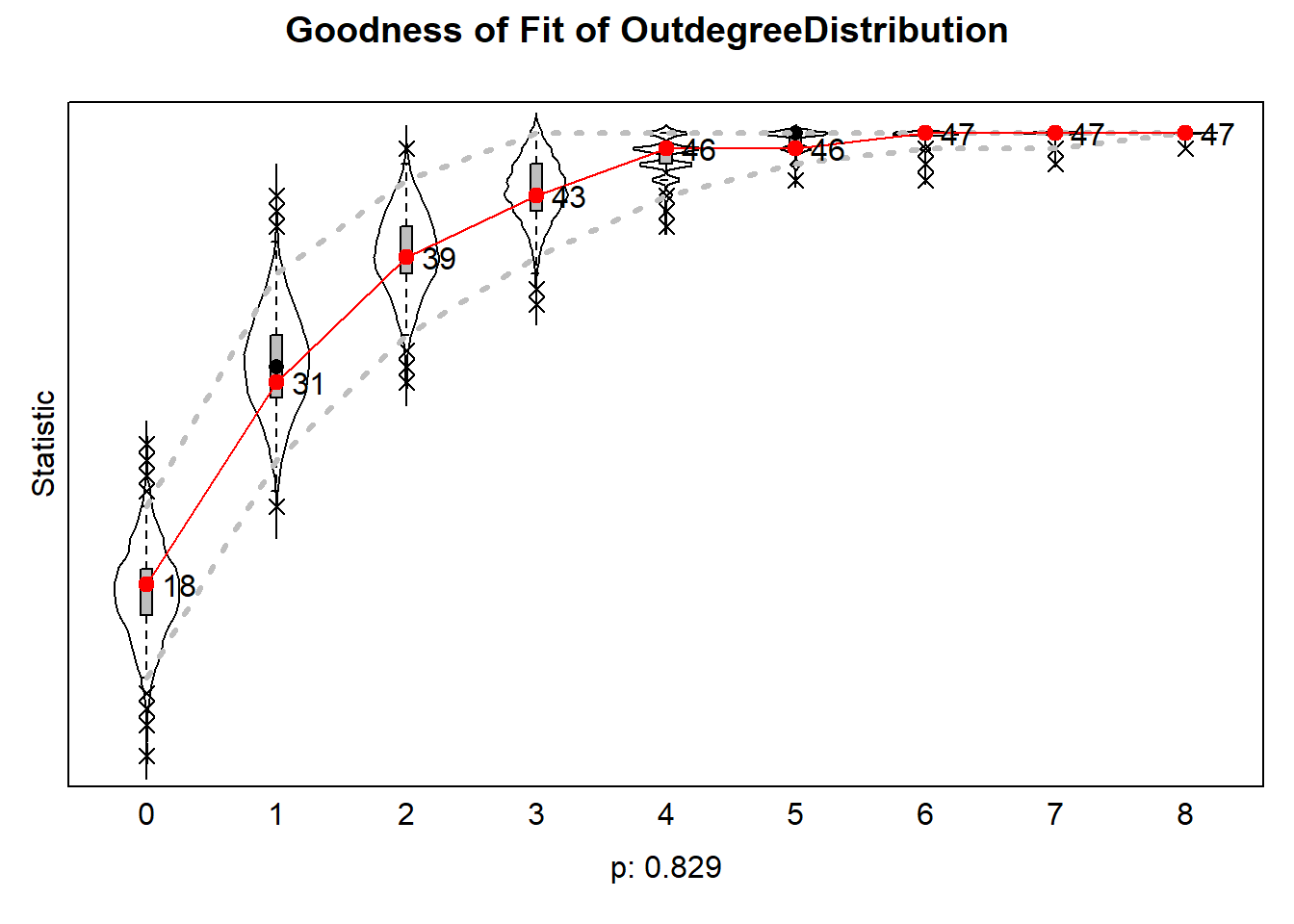

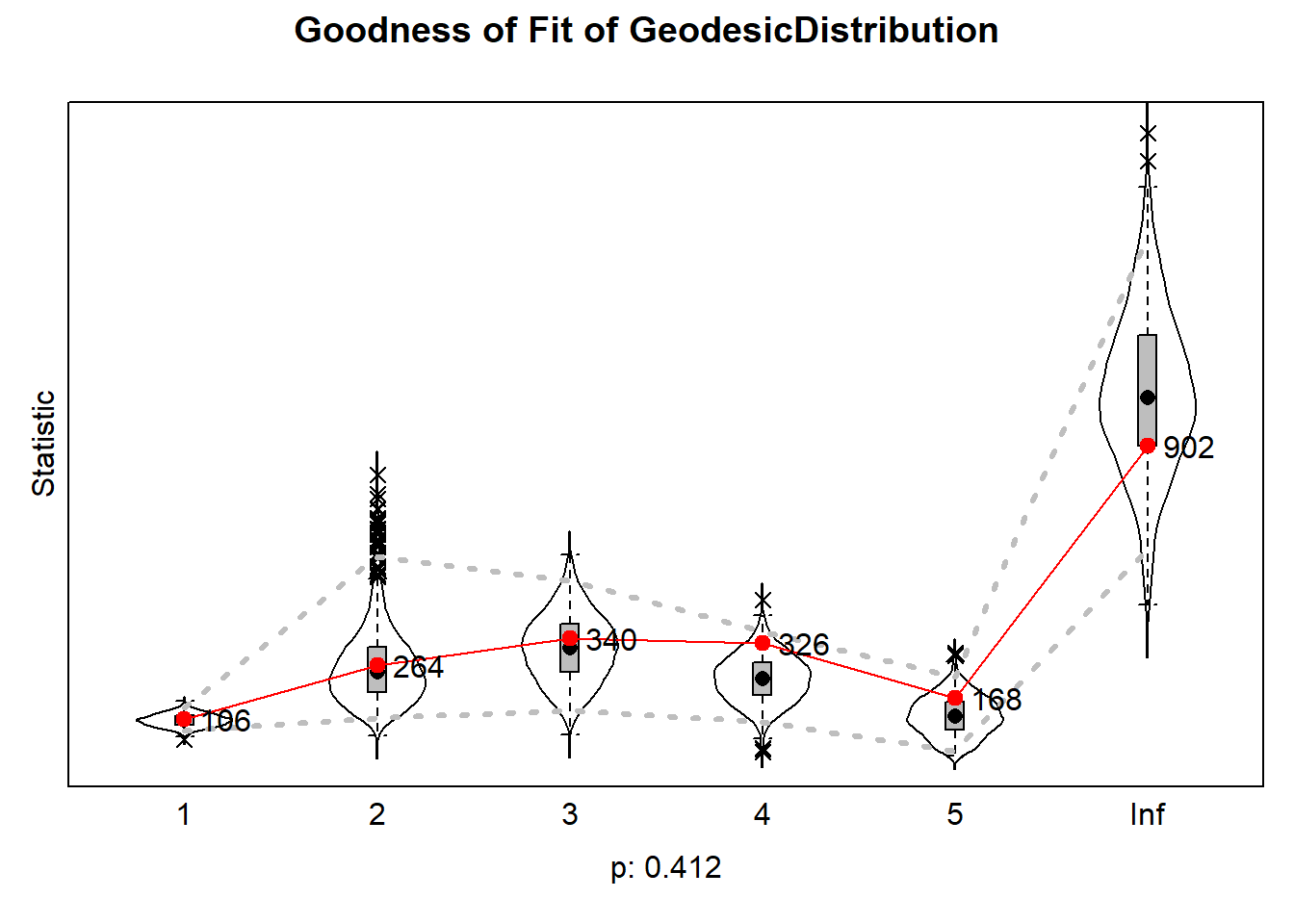

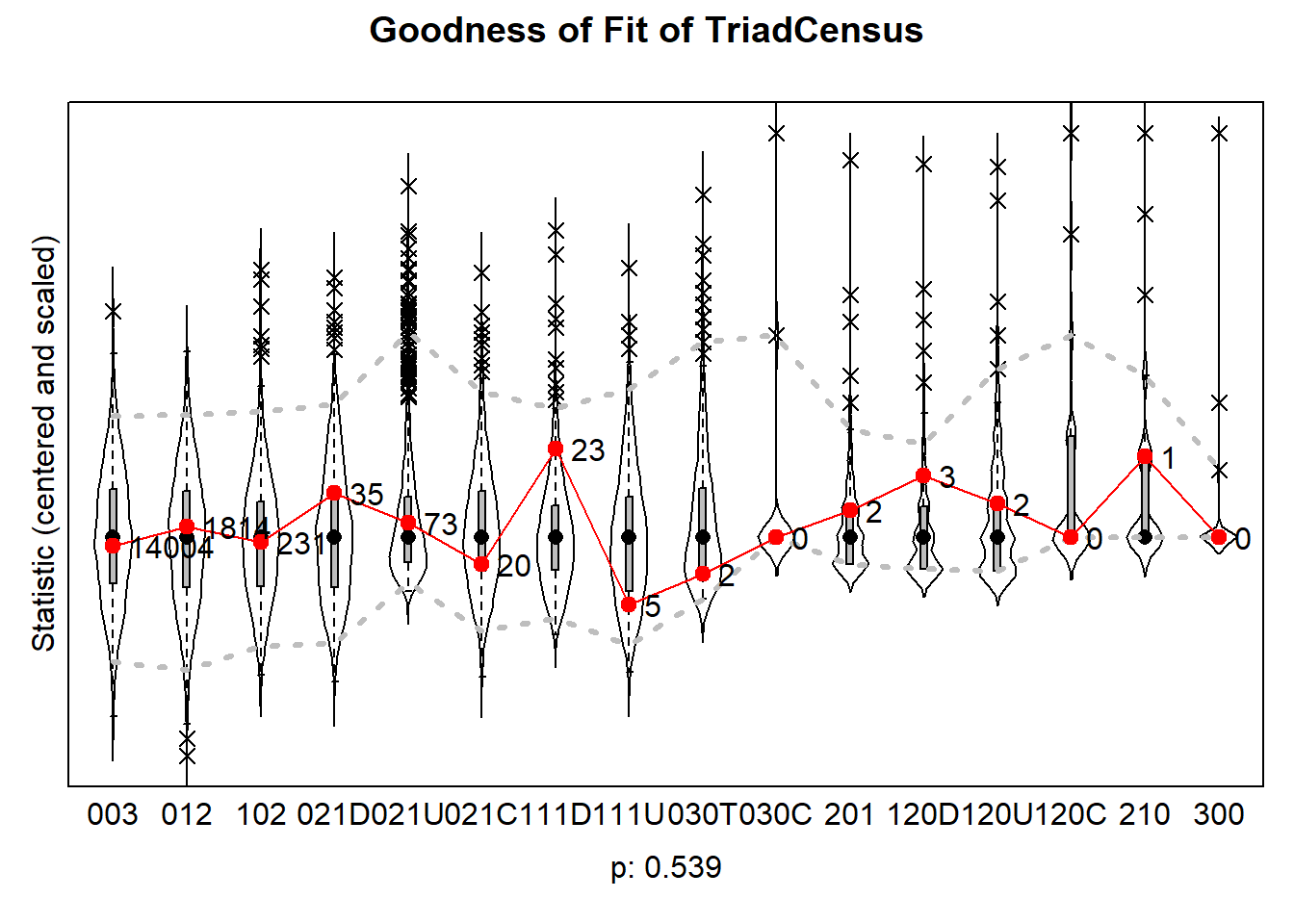

6.6 Step 6: GOF

Here, scripts are shown that can be used to present violin plots representing how well the simulations of our SIENA models capture the distribution of features of the dependent variable(s) (i.e., networks and ‘behavior’) that were not directly modeled, but for which a good fit between model and data is desirable.

Background reading: Lospinoso & Snijders (2019)

6.6.1 Background

The goal of GOF-testing is to ensure that our estimated SIENA model accurately represents the observed data of the dependent variable, based on so-called auxiliary statistics, such as the distribution of outdegrees, indegrees, reciprocity, triadic configurations, geodesic distances, behavior traits, edgewise similarity, etc. This list is not exhaustive and should be tailored to the specific research question.

The assessment of fit involves comparing observed network features to their expected values in the estimated distribution of networks, derived from a large number of simulations (saved when returnDeps=TRUE in the siena07-call). If the assessment reveals a poor fit, it becomes necessary to propose model elaborations to improve the fit between the model and data.

Although one might possess theoretical notions about remediation, the complex nature of networks introduces a vast array of potential effects to consider (as shown by the large list of effects in the RSiena manual). In many instances, relying solely on theory and experience is insufficient to confidently propose the effects that ought to be incorporated for better model fit. Also, experimenting with various model specifications can be time-consuming.

RSiena provides a computationally efficient predictor for assessing the fit if the model were to be extended by specific additional effects. This estimator can be evaluated using only ingredients calculated already for the method-of-moments estimation of the restricted model (thus, testing an effect without estimating it, by setting test=TRUE and fix=TRUE in the includeEffects-call).

The results can be plotted which then produce violin plots, which present the distribution of the statistic as a combination of a box plot and a smooth approximation to the density (by a kernel density estimate), with the observed values superimposed.

The p-values for sienaGOF compare, in the space of outcomes of the auxiliary statistic, the position of the observed data to the cloud of points formed by the simulated data sets that correspond to the estimated model. This comparison is with respect to the ‘distance’ from the center of

the cloud of points, where ‘distance’ is between quotation marks because it is the Mahalanobis distance, which takes into account the correlations and different variances of the components of the auxiliary statistic.

A very small value of p indicates poor fit. The customary value of p = 0.05 may be used as a threshold determining whether the fit is adequate, but this threshold is of even less importance here than it is in the case of regular hypothesis testing. Concluding, if p = 0, then with respect to the auxiliary statistic the fit is poor; it might be rather poor or extremely poor, and you do not know how extreme it is.

For more info, we refer to the article by Lospinoso & Snijders (2019) and the RSiena manual section 5.14.

6.6.2 Define GOF-auilliary

Now we define some functions from sienaGOF-auxiliary.

# see here: ?'sienaGOF-auxiliary'

# The geodesic distribution is not available from within RSiena, and therefore is copied from the

# help page of sienaGOF-auxiliary:

# GeodesicDistribution calculates the distribution of non-directed geodesic distances; see

# ?sna::geodist The default for \code{levls} reflects the usual phenomenon that geodesic distances

# larger than 5 do not differ appreciably with respect to interpretation. Note that the levels of

# the result are named; these names are used in the \code{plot} method.

GeodesicDistribution <- function(i, data, sims, period, groupName, varName, levls = c(1:5, Inf), cumulative = TRUE,

...) {

x <- networkExtraction(i, data, sims, period, groupName, varName)

require(sna)

a <- sna::geodist(symmetrize(x))$gdist

if (cumulative) {

gdi <- sapply(levls, function(i) {

sum(a <= i)

})

} else {

gdi <- sapply(levls, function(i) {

sum(a == i)

})

}

names(gdi) <- as.character(levls)

gdi

}

# The following function is taken from the help page for sienaTest

testall <- function(ans) {

for (i in which(ans$test)) {

sct <- score.Test(ans, i)

cat(ans$requestedEffects$effectName[i], "\n")

print(sct)

}

invisible(score.Test(ans))

}6.6.3 apply sienaGOF

Now, we can go to applying sienaGOF to our data.

Goodness-of-fit tests based on various auxiliary statistics:

gofi0 <- sienaGOF(ans, IndegreeDistribution, verbose = FALSE, join = TRUE, varName = "net")

gofo0 <- sienaGOF(ans, OutdegreeDistribution, verbose = FALSE, join = TRUE, varName = "net")

gof0.gd <- sienaGOF(ans, GeodesicDistribution, cumulative = FALSE, verbose = FALSE, join = TRUE, varName = "net")

gof0.tc <- sienaGOF(ans, TriadCensus, verbose = FALSE, join = TRUE, varName = "net")

# ?sienaGOF6.6.4 GOF plots initial model

6.6.4.1 indegree distribution

plot(gofi0)

6.6.4.2 outdegree distribution

plot(gofo0)

6.6.4.3 geodesic distances

plot(gof0.gd)

6.6.4.4 triad census

plot(gof0.tc, center = TRUE, scale = TRUE)

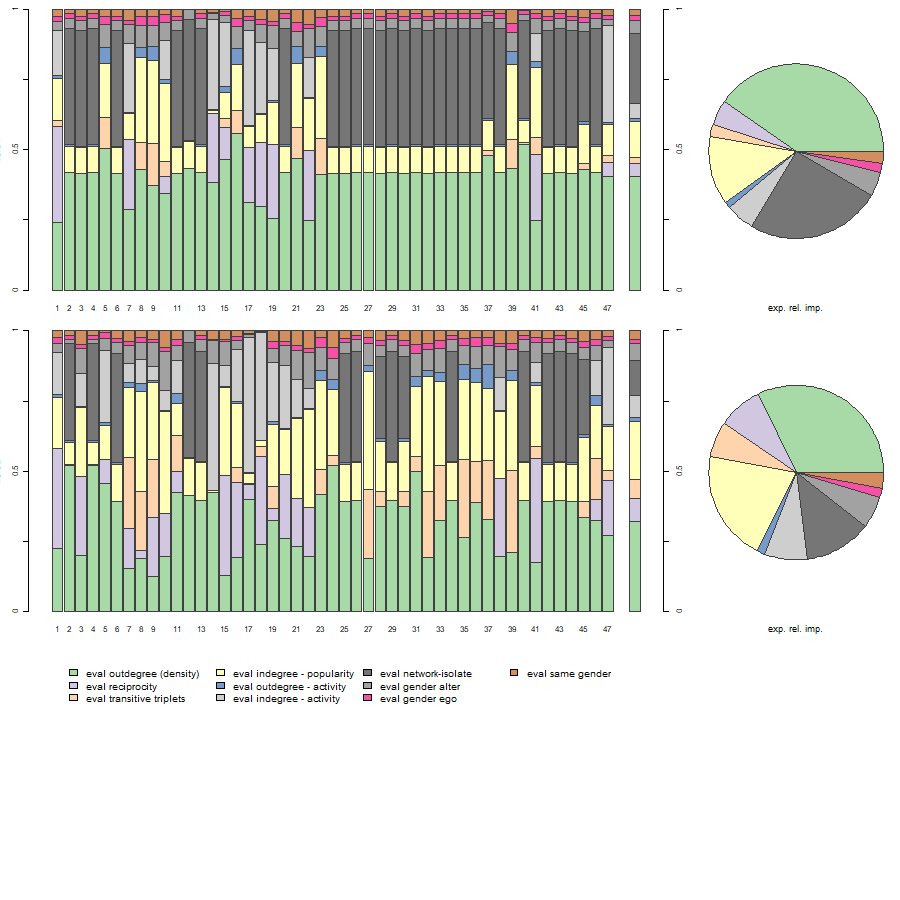

6.7 Step 7 (optional): Relative Influence

Here, scripts are shown that can be used to calculate the expected relative importance of our effects.

Background reading: Indlekofer & Brandes (2013)

6.7.1 Background

Until now, the interpretation of estimated effects in our SIENA models has been limited to testing their statistical significance, which determines whether an effect plays a role in the evolution of the network (using t-statistics). But we do not yet know how these effects fare against each other.

There are four issues when extrapolating the size of estimated parameters to their relative importance in SIENA models:

Explanatory statistics have different scales (e.g., one micro-step may increase the number of reciprocated ties by at most 1 but may result in up to 2(N-2) new transitive triplets).

Explanatory variables are often correlated, making it difficult to establish causality (e.g., a tie abridging a two-path may yield a new transitive triplet, while at the same time, a reciprocated tie).

Multiple and complex choice sets exist, where network effects influence the probabilities of several alternative choices, and these effects are themselves influenced by a combination of several effects. This interdependence makes it challenging to assess the individual contribution of each effect to actor decisions.

The data undergoes substantial unobserved changes over time, and the size of parameter estimates is strongly dependent on the structure of the evolving network data. The absence of certain network configurations can render specific effects irrelevant in decision-making processes at certain points in time (e.g., if an ego has no incoming ties, he has no opportunity to reciprocate a tie, making that the

reciprocityeffect cannot influence his decision).

To compare the relative importance of effects within a model, among different models, or across different datasets, we require a measure that specifically focuses on the extent to which effects influence actor decision probabilities.

This is where the concept of ‘Relative Importance’ (RI) measures comes into play. This measure reflects the extent that estimated model parameters affect change probabilities in network decision probabilities. They should be interpreted as the influence of effects on network changes relative to one another. The importance of an effect is estimated based on the extent to which network micro-steps would have differed if this effect were to be omitted. Probabilities for tie changes from the perspective of each actor are calculated using the fitted model parameters. Subsequently, each parameter is fixed to 0 and the change probabilities are recalculated. The influence of an effect on network (or: behavior) micro-steps is evaluated based on the magnitude of the difference in the distribution of change probabilities with the particular effect present versus absent. These differences are normalized so that their sum is 1 for each actor, and subsequently averaged across actors.

For more info, we refer to the article by Indlekofer & Brandes (2013) and the RSiena manual section 13.5.1.

6.7.2 SienaRI

Now, we can go to applying sienaRI to co-publishing network data.

# get parameters

theta.eval <- ans$theta

# and effects

myeff.eval <- ans$effects

# use sienaRI()

RI <- sienaRI(data = mydata, theta = theta.eval, algorithm = myAlgorithm, effects = myeff.eval)And plot it.

plot(RI, addPieChart = TRUE)

The bar charts display the relative impacts of effects of our model on individual actor decisions for all observations. The last bar chart in each row, as well as the pie chart, display expected relative importance of included effects for the next step, averaged across actors.

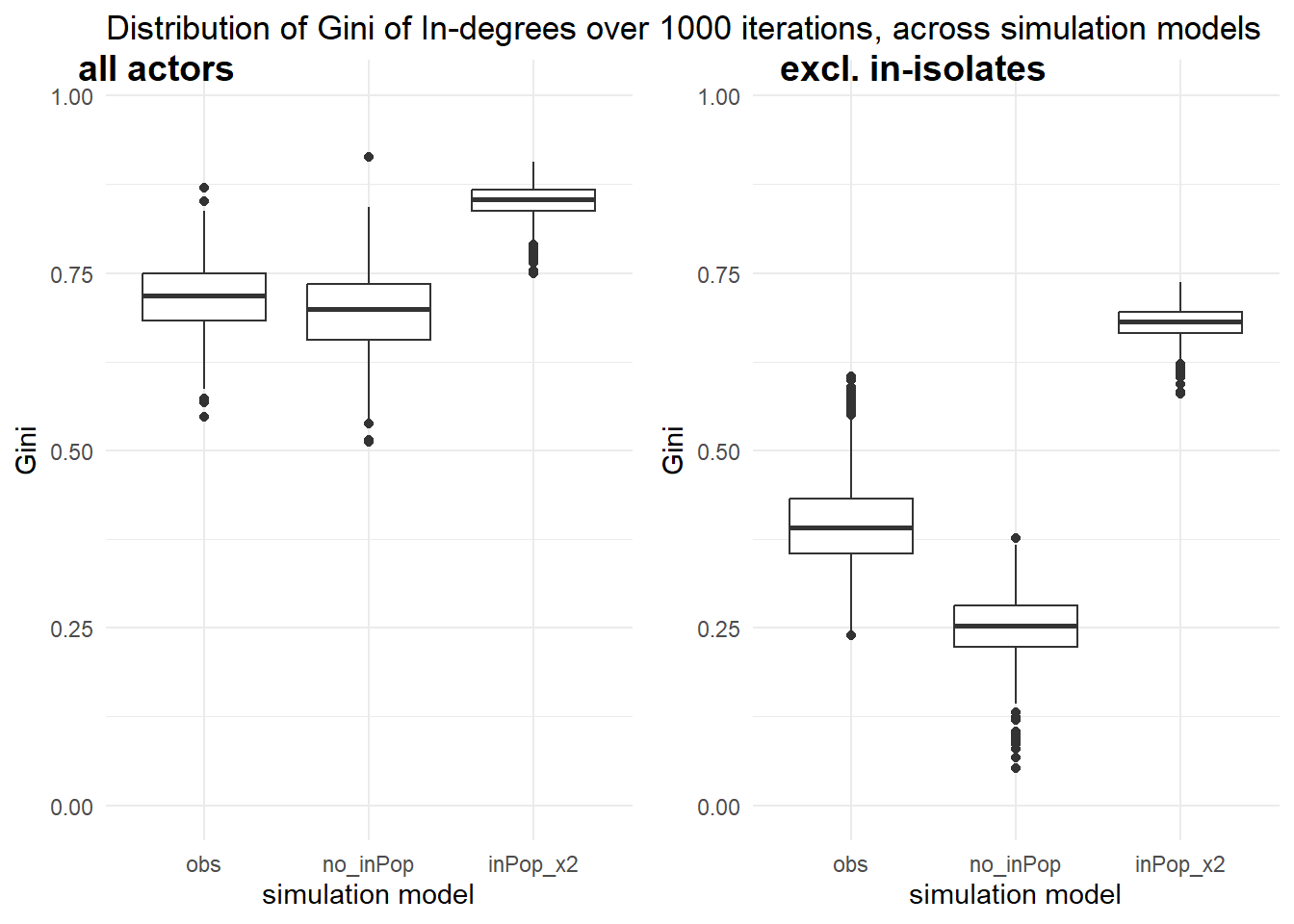

6.8 Step 8 (optional): Micro-Macro (SIENA as ABM)

To gain a better understanding of how consequential our (selection) effects of interest at the micro-level are for social network properties at the macro-level, we can use our estimated SAOMs as empirically calibrated agent-based simulation models.

Background reading: Snijders & Steglich (2015).

We set initial effects values of our simulation models based on our empirically estimated model (ans) and we fix the effects at these values. We specify 2 additional models:

- no indegree-popularity (inPop) model

- stronger indegree-popularity (inPop) model

Our macro- / network outcome of interest is the skewness of the in-degree distribution. We measure this through the Gini index. We will extract the Gini for the in-degrees from each simulation run.

#make new effects objects for simulation models

myeff_obs <- myeff

#set initial values based on estimated model

myeff_obs <- setEffect(myeff_obs, density, initialValue = ans$theta[which(ans$effects$shortName == "density")])#> effectName include fix test initialValue parm

#> 1 outdegree (density) TRUE FALSE FALSE -2.47081 0myeff_obs <- setEffect(myeff_obs, recip, initialValue = ans$theta[which(ans$effects$shortName == "recip")])#> effectName include fix test initialValue parm

#> 1 reciprocity TRUE FALSE FALSE 2.36353 0myeff_obs <- setEffect(myeff_obs, transTrip, initialValue = ans$theta[which(ans$effects$shortName == "transTrip")])#> effectName include fix test initialValue parm

#> 1 transitive triplets TRUE FALSE FALSE 0.76667 0myeff_obs <- setEffect(myeff_obs, inPop, initialValue = ans$theta[which(ans$effects$shortName == "inPop")])#> effectName include fix test initialValue parm

#> 1 indegree - popularity TRUE FALSE FALSE 0.22069 0myeff_obs <- setEffect(myeff_obs, outAct, initialValue = ans$theta[which(ans$effects$shortName == "outAct")])#> effectName include fix test initialValue parm

#> 1 outdegree - activity TRUE FALSE FALSE 0.01937 0myeff_obs <- setEffect(myeff_obs, inAct, initialValue = ans$theta[which(ans$effects$shortName == "inAct")])#> effectName include fix test initialValue parm

#> 1 indegree - activity TRUE FALSE FALSE -0.42412 0myeff_obs <- setEffect(myeff_obs, isolateNet, initialValue = ans$theta[which(ans$effects$shortName == "isolateNet")])#> effectName include fix test initialValue parm

#> 1 network-isolate TRUE FALSE FALSE 2.43861 0myeff_obs <- setEffect(myeff_obs, altX, interaction1 = "gender", initialValue = ans$theta[which(ans$effects$shortName == "altX")])#> effectName include fix test initialValue parm

#> 1 gender alter TRUE FALSE FALSE -0.38869 0myeff_obs <- setEffect(myeff_obs, egoX, interaction1 = "gender", initialValue = ans$theta[which(ans$effects$shortName == "egoX")])#> effectName include fix test initialValue parm

#> 1 gender ego TRUE FALSE FALSE 0.15153 0myeff_obs <- setEffect(myeff_obs, sameX, interaction1 = "gender", initialValue = ans$theta[which(ans$effects$shortName == "sameX")])#> effectName include fix test initialValue parm

#> 1 same gender TRUE FALSE FALSE 0.15722 0#fix effects at this value

myeff_obs$fix[myeff_obs$include == TRUE] <- TRUE

#additional models:

myeff_no <- myeff_high <- myeff_obs

#adjust inPop effect

myeff_no <- setEffect(myeff_obs, inPop, initialValue = 0, fix = TRUE)#> effectName include fix test initialValue parm

#> 1 indegree - popularity TRUE TRUE FALSE 0 0myeff_high <- setEffect(myeff_obs, inPop, initialValue = ans$theta[which(ans$effects$shortName == "inPop")] * 2, fix = TRUE)#> effectName include fix test initialValue parm

#> 1 indegree - popularity TRUE TRUE FALSE 0.44137 0#set up the simulation settings

nIter <- 1000 # number of iterations

sim_model <- sienaAlgorithmCreate(

projname = 'simulation',

cond = FALSE,

useStdInits = FALSE, nsub = 0,

n3 = nIter,

seed=242452, # seed for replication

simOnly = TRUE)#> If you use this algorithm object, siena07 will create/use an output file simulation.txt .#make vector to store gini across models (observed, no inPop, high inPop)

#we also make vectors to calculate gini excluding the in-isolates

gini_obs <- gini_no <- gini_high <- gini_obs2 <- gini_no2 <- gini_high2 <- rep(0, nIter)

# simulation using estimated parameters

sim_ans_obs <- siena07(sim_model, # simulation settings

data = mydata, # data

effects = myeff_obs,# defined effects and set parameters

returnDeps = TRUE) # return simulated networks

sim_ans_no <- siena07(sim_model, # simulation settings

data = mydata, # data

effects = myeff_no,# defined effects and set parameters

returnDeps = TRUE) # return simulated networks

sim_ans_high <- siena07(sim_model, # simulation settings

data = mydata, # data

effects = myeff_high,# defined effects and set parameters

returnDeps = TRUE) # return simulated networks

#extract gini from simulation runs

#no. of actors

n <- length(mydata$nodeSets[[1]])

#observed model

for (i in 1:nIter) {

#create empty adjacency matrix

adj <- matrix(0, n, n)

#shorter notation for edge list for iteration i

edges <- sim_ans_obs$sims[[i]][[1]][[1]][[1]]

#The numbering is as follows: first the number of the simulation run (i); then the number of the group (1 in the usual case of single-group data structures); then the number of the dependent variable (here 1, because it is supposed that there only is a dependent network); then the period number (i.e., wave number minus 1).

#str(sim_ans_high$sims[[i]])

#put edge values in desired place

adj[edges[, 1:2]] <- edges[, 3]

#count number of indegrees

indegs <- colSums(adj)

gini_obs[i] <- DescTools::Gini(indegs)

#exclude the 0s

gini_obs2[i] <- DescTools::Gini(indegs[!indegs==0])

}

#no inPop model

for (i in 1:nIter) {

#create empty adjacency matrix

adj <- matrix(0, n, n)

#shorter notation for edge list for iteration i

edges <- sim_ans_no$sims[[i]][[1]][[1]][[1]]

#put edge values in desired place

adj[edges[, 1:2]] <- edges[, 3]

#count number of indegrees

indegs <- colSums(adj)

gini_no[i] <- DescTools::Gini(indegs)

#exclude the 0s

gini_no2[i] <- DescTools::Gini(indegs[!indegs==0])

}

#high inPop model

for (i in 1:nIter) {

#create empty adjacency matrix

adj <- matrix(0, n, n)

#shorter notation for edge list for iteration i

edges <- sim_ans_high$sims[[i]][[1]][[1]][[1]]

#put edge values in desired place

adj[edges[, 1:2]] <- edges[, 3]

#count number of indegrees

indegs <- colSums(adj)

gini_high[i] <- DescTools::Gini(indegs)

#exclude the 0s

gini_high2[i] <- DescTools::Gini(indegs[!indegs==0])

}

plotdata <- data.frame(condition = c(rep("obs", nIter), rep("no_inPop", nIter), rep("inPop_x2", nIter)),

gini = c(gini_obs, gini_no, gini_high))

plotdata$condition <- factor(plotdata$condition, levels = c("obs", "no_inPop", "inPop_x2"))

#exclude in-isolates

plotdata2 <- data.frame(condition = c(rep("obs", nIter), rep("no_inPop", nIter), rep("inPop_x2", nIter)),

gini = c(gini_obs2, gini_no2, gini_high2))

plotdata2$condition <- factor(plotdata$condition, levels = c("obs", "no_inPop", "inPop_x2"))

p1 <- ggplot(plotdata, aes(x = condition, y = gini)) +

geom_boxplot() +

labs(title = "Distribution of Gini of In-degrees over 1000 iterations, across simulation models",

x = "simulation model",

y = "Gini") +

ylim(0,1) +

theme_minimal()

p2 <- ggplot(plotdata2, aes(x = condition, y = gini)) +

geom_boxplot() +

labs(x = "simulation model",

y = "Gini") +

ylim(0,1) +

theme_minimal()

ggpubr::ggarrange(p1,p2, align = "h", labels = c("all actors", "excl. in-isolates"), vjust = 3)

7 THE END

YES, you made it until the end!.

Congrats. Time for drinks.

References

Copyright © 2024 Jochem Tolsma